What happens the day after? What happens next winter?

Sure – we must find effective treatment and vaccines. Sure – we need to reduce or eliminate the need for on-site monitoring visits to hospitals in clinical trials. And sure – we need to enable patient monitoring at home.

But let’s not be distracted from 3 more significant challenges:

1 – Improve patient care

2 – Enable real-time data sharing. Enable participants in the battle with COVID-19 to share real-world / placebo arm data, making the fight with COVID-19 more efficient and collaborative.

3 – Enable researchers to dramatically improve data reliability, allowing better decision making and improving patient safety.

Clinical research should ultimately improve patient care.

The digital health space is highly fragmented (I challenge you to precisely define the difference between patient engagement apps, patient adherence apps and patient management apps). There are over 300 digital therapeutic startups. We lack a common ‘operating system’, and there is a dearth of vendor-neutral standards that would enable interoperability between different digital health systems, mobile apps and services.

By comparison – clinical trials have a well-defined methodology, standards (GCP) and generally accepted data structures in case report forms. So why do many clinical trials fail to translate into patient benefit?

A 2017 article by Carl Heneghan, Ben Goldacre & Kamal R. Mahtani “Why clinical trial outcomes fail to translate into benefits for patients” (you can read the Open Access article here) states the obvious: that the objective of clinical trials is to improve patients’ health.

The article points at a number of serious issues ranging from badly chosen outcomes, composite outcomes, subjective outcomes and lack of relevance to patients and decision makers to issues with data collection and study monitoring.

Clinical research should ultimately improve patient care. For this to be possible, trials must evaluate outcomes that genuinely reflect real-world settings and concerns. However, many trials continue to measure and report outcomes that fall short of this clear requirement…

Trial outcomes can be developed with patients in mind, however, and can be reported completely, transparently and competently. Clinicians, patients, researchers and those who pay for health services are entitled to demand reliable evidence demonstrating whether interventions improve patient-relevant clinical outcomes.

There can be fundamental issues with study design and how outcomes are reported.

This is an area where modeling and ethical conduct intersect, and both are critical.

Technology can support modeling using model verification techniques (used in software engineering, chip design, aircraft and automotive design).

However, ethical conduct is still a human attribute that can neither be automated nor replaced with an AI.

Let’s leave modeling to the AI researchers and ethics to the bioethics professionals.

For now at least.

In this article, I will take a closer look at 3 activities that have a crucial impact on data quality and patient safety. These 3 activities are orthogonal to the study model and ethical conduct of the researchers:

1 – The time it takes to detect and log protocol deviations.

2 – Signal detection of adverse events (related to 1)

3 – Patients lost to follow-up (also related to 1)

Time to detect and log deviations

The standard for study monitors is to visit investigational sites once every 5-12 weeks. A Phase IIB study with 150 patients that lasts 12 months would typically have 6-8 site visits (which, incidentally, cost the sponsor $6-8M including the rewrites, reviews and data management loops to close queries).
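To make the arithmetic concrete, here is a minimal sketch of how the visit interval drives both the number of visits and the delay before a deviation can be seen by a monitor. The function name `monitoring_schedule` is hypothetical, and the mean delay assumes deviations occur uniformly between visits, which is a simplification and not a figure from any study.

```python
def monitoring_schedule(study_weeks: int, interval_weeks: int) -> dict:
    """Rough visit count and detection delay for periodic on-site monitoring.

    Assumption (not from the article): a deviation is only detected at the
    next site visit, and deviations occur uniformly between visits.
    """
    interval_days = interval_weeks * 7
    return {
        "visits": study_weeks // interval_weeks,
        "worst_case_delay_days": interval_days,   # deviation right after a visit
        "mean_delay_days": interval_days / 2,     # uniform-arrival assumption
    }


# A 12-month (52-week) study at both ends of the 5-12 week range.
for interval in (5, 12):
    print(interval, monitoring_schedule(52, interval))
```

Under these assumptions, the 5-12 week range gives between 4 and 10 visits in a year, which brackets the typical 6-8 visits, and a deviation can wait up to 84 days before anyone on the monitoring side sees it.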

Adverse events

As reported by Heneghan et al:

A further review of 11 studies comparing adverse events in published and unpublished documents reported that 43% to 100% (median 64%) of adverse events (including outcomes such as death or suicide) were missed when journal publications were solely relied on [45]. Researchers in multiple studies have found that journal publications under-report side effects and therefore exaggerate treatment benefits when compared with more complete information presented in clinical study reports [46]

Loss of statistical significance due to patients lost to follow-up

As reported by Akl et al in “Potential impact on estimated treatment effects of information lost to follow-up in randomized controlled trials (LOST-IT): systematic review” (you can see the article here):

When we varied assumptions about loss to follow-up, results of 19% of trials were no longer significant if we assumed no participants lost to follow-up had the event of interest, 17% if we assumed that all participants lost to follow-up had the event, and 58% if we assumed a worst case scenario (all participants lost to follow-up in the treatment group and none of those in the control group had the event).
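The three assumptions in the quote can be illustrated with a small sensitivity check. This is a sketch on invented numbers, not a reproduction of the LOST-IT analysis: the `Arm` class, the example trial and the pooled two-proportion z-test are all assumptions chosen for brevity.

```python
import math
from dataclasses import dataclass


@dataclass
class Arm:
    randomized: int
    lost: int             # participants lost to follow-up
    events_observed: int  # events among participants who were followed up


def two_sided_p(e1: int, n1: int, e2: int, n2: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (e1 + e2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0
    z = (e1 / n1 - e2 / n2) / se
    return math.erfc(abs(z) / math.sqrt(2))


def sensitivity(treatment: Arm, control: Arm) -> dict:
    """Re-test the result under three assumptions about lost participants."""
    # (extra events assumed in treatment arm, extra events assumed in control arm)
    scenarios = {
        "none_lost_had_event": (0, 0),
        "all_lost_had_event": (treatment.lost, control.lost),
        "worst_case": (treatment.lost, 0),
    }
    return {
        name: two_sided_p(
            treatment.events_observed + extra_t, treatment.randomized,
            control.events_observed + extra_c, control.randomized,
        )
        for name, (extra_t, extra_c) in scenarios.items()
    }


# Hypothetical trial: 100 per arm, 10 lost per arm.
treatment = Arm(randomized=100, lost=10, events_observed=15)
control = Arm(randomized=100, lost=10, events_observed=30)
for name, p in sensitivity(treatment, control).items():
    print(f"{name}: p = {p:.3f}, significant at 0.05: {p < 0.05}")
```

In this invented example the treatment effect is significant under the first two assumptions and stops being significant under the worst case, even though only 10% of participants in each arm were lost.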

Real-time data

Real-time data (not data collected from paper forms 5 days after the patient left the clinic) is key to providing an immediate picture and assuring interpretable data for decision-making.

Any combination of data sources should work – patients, sites, devices, electronic medical record systems, laboratory information systems or some of your own code. Like this:

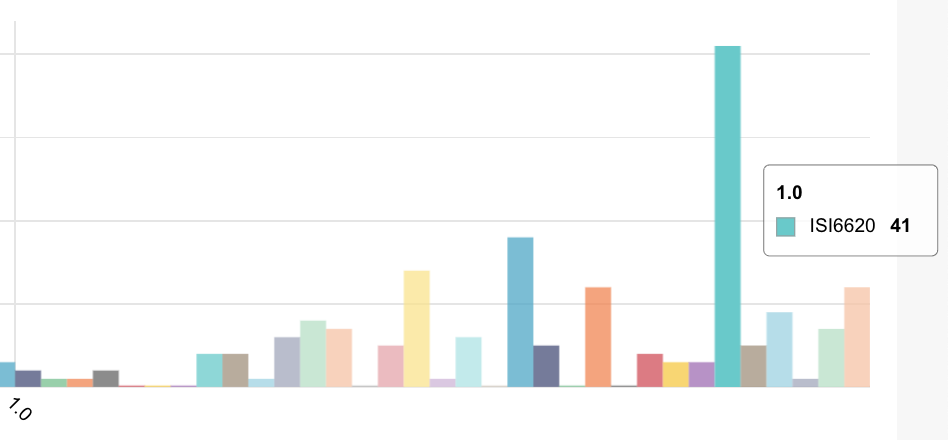

Signal detection

The second missing piece is signal detection for safety, data quality and risk assessment at the patient, site and study level.

Signal detection should be based upon the clinical protocol and be able to classify the patient into 1 of 3 states: complies, exception (took too much or too little or too late for example) and miss (missed treatment or missing data for example).
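As a minimal sketch of the three-state classification, consider a single dosing rule taken from a protocol. The names `DoseRule`, `DoseEvent` and `classify`, and the tolerance and window fields, are hypothetical; a real protocol would have many more rule types.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class Signal(Enum):
    COMPLIES = "complies"
    EXCEPTION = "exception"
    MISS = "miss"


@dataclass
class DoseRule:
    expected_mg: float
    dose_tolerance_mg: float  # allowed deviation from the expected dose
    due: datetime
    window: timedelta         # allowed lateness after the due time


@dataclass
class DoseEvent:
    taken_mg: float
    taken_at: datetime


def classify(rule: DoseRule, event: Optional[DoseEvent]) -> Signal:
    """Put one expected dose into 1 of 3 states."""
    if event is None:
        return Signal.MISS  # missed treatment or missing data
    wrong_dose = abs(event.taken_mg - rule.expected_mg) > rule.dose_tolerance_mg
    too_late = event.taken_at > rule.due + rule.window
    if wrong_dose or too_late:
        return Signal.EXCEPTION  # too much, too little or too late
    return Signal.COMPLIES


rule = DoseRule(expected_mg=50, dose_tolerance_mg=5,
                due=datetime(2020, 4, 1, 8, 0), window=timedelta(hours=2))
print(classify(rule, DoseEvent(50, datetime(2020, 4, 1, 8, 30))))
print(classify(rule, DoseEvent(80, datetime(2020, 4, 1, 8, 30))))
print(classify(rule, None))
```

Because the rule is derived from the protocol and not from the data, the same classification can be applied to every patient at every site as soon as the data arrives.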

You can visualize signal classification as putting the patient state into 1 of 3 boxes: complies, exception and miss.

Automated response

One of the biggest challenges for sponsors running clinical trials is delayed detection and response. Protocol deviations are logged 5-12 weeks (and in a best case 2-3 days) after the fact. Response then trickles back to the site and to the sponsor – resulting in patients lost to follow-up and adverse events that are recorded long after the fact.

If we can automate signal detection then we can also automate response and then begin to understand the causes of the deviations. Understanding context and cause is much easier when done in real-time. A good way to illustrate this is to think about what you were doing 2 weeks ago today and try to connect that with a dry cough, light fever and aching back. The symptoms may be indicative of COVID-19, but you probably don’t remember what you were doing and with whom you came into close contact. The solution to COVID-19 back-tracking is the use of digital surveillance and automation. Similarly, the solution for responding to exceptions and misses is to digitize and automate the process.
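One way to picture automated response is a small playbook that turns each detected signal into an immediate, time-boxed action. This is only a sketch: the `PLAYBOOK` contents, the recipients, the messages and the response deadlines are invented for illustration, and a real study would define them in its monitoring plan.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Response:
    notify: List[str]
    message: str
    respond_by: datetime


# Hypothetical playbook: signal -> (who to notify, what to ask, how fast).
PLAYBOOK = {
    "exception": (
        ["patient", "site_coordinator"],
        "Dose was outside the protocol limits. Please confirm what happened.",
        timedelta(hours=4),
    ),
    "miss": (
        ["patient", "site_coordinator", "study_monitor"],
        "Expected treatment or data is missing. Please contact the patient.",
        timedelta(hours=1),
    ),
}


def respond(signal: str, detected_at: datetime) -> Optional[Response]:
    """Return the action for a signal, or None when the patient complies."""
    if signal == "complies":
        return None
    recipients, message, deadline = PLAYBOOK[signal]
    return Response(notify=recipients, message=message,
                    respond_by=detected_at + deadline)


print(respond("miss", datetime(2020, 4, 1, 10, 0)))
```

The point of the sketch is the timing: the question goes to the patient and the site within hours of the event, while the context is still fresh, instead of weeks later at the next monitoring visit.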

Summary

In summary we see 3 key issues with creating meaningful outcomes for patients:

1 – The time it takes to detect and log protocol deviations.

2 – Signal detection of adverse events and risk (related to 1)

3 – Patients lost to follow-up (also related to 1)

These 3 issues for creating meaningful outcomes for patients can be resolved with 3 tightly integrated technologies:

1 – Real-time data acquisition for patients, devices and sites (study nurses, site coordinators, physicians)

2 – Automated detection

3 – Automated response