A major data loss event such as the Hannaford Supermarkets breach (4M credit card records leaked…) is a black swan as described by Nassim Nicholas Taleb. It has three characteristics:

- It comes as a complete surprise to the company.

- Its impact is severe enough to maim or destroy the institution (note the case of CardSystems, or Hannaford whipping out a check for $10M to IBM for a get-out-of-jail-free card).

- After it has happened, the event is ‘explained’ by human hindsight.

Most large organizations focus on operational event risk: “external threats and failure of internal processes and systems”. This is not surprising, since event risk often implies non-compliance with regulation, and events such as rogue trading and accounting scandals have dominated newspaper headlines. Regulatory concerns are important, but a company must make sure that its economic capital program covers all major risks, and empirical data is beginning to show that operational event risk may not be the biggest concern. Instead, a substantial share of operational risk may come from data loss threats that traditional risk modeling cannot predict and enterprise risk management (ERM) systems cannot manage.

Companies are very vulnerable to unpredictable, high-impact data loss events and are exposed to huge losses beyond what traditional risk models project.

Why data loss comes as a complete surprise to a company

Data loss events are unpredictable, high-impact events without precedent that cannot be forecast with a risk model.

The assumption in market risk models is that the unexpected can be predicted by extrapolating trends from past observations, especially when those observations are assumed to be samples from a normal distribution. Other distributions may fit historical data better, such as fractal distributions (for earthquakes), Lévy distributions (for securities returns) or extreme value theory (EVT) models (for operational risk events). Yet in all major data loss cases, organizational culture was at the center of the losses; more specifically, a complex interaction of culture, people and rapidly evolving technology.
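To make the extrapolation problem concrete, here is a minimal Python sketch using hypothetical loss figures. The loss history, the 10M Euro threshold and the Pareto shape parameter `alpha = 1.5` are all assumptions for illustration, not data from any real breach. It fits a normal model and a heavy-tailed Pareto model to the same small history and asks each how likely a 10M Euro loss is.

```python
from statistics import NormalDist

# Hypothetical annual data loss figures, in thousands of euros (illustrative only).
observed_losses = [120, 95, 140, 110, 130, 105, 125, 100]

# A 10M euro loss, expressed in the same units (thousands of euros).
extreme_loss = 10_000

# Normal model: extrapolate mean and spread from past observations.
normal_model = NormalDist.from_samples(observed_losses)
normal_tail = 1 - normal_model.cdf(extreme_loss)

# Heavy-tailed alternative: Pareto survival function P(X > x) = (x_min / x) ** alpha.
# x_min is the smallest observed loss; alpha = 1.5 is an assumed shape parameter.
x_min = min(observed_losses)
alpha = 1.5
pareto_tail = (x_min / extreme_loss) ** alpha

print(f"Normal model: P(loss > 10M EUR) = {normal_tail:.2e}")
print(f"Pareto model: P(loss > 10M EUR) = {pareto_tail:.2e}")
```

The normal fit treats such a loss as essentially impossible, while the Pareto tail gives it a small but real probability. The answers differ by orders of magnitude depending on a modeling choice that eight data points cannot settle, and neither model says anything about the culture, people and technology factors that drive actual data loss.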

Don’t expect loss – implement robustness to data loss events

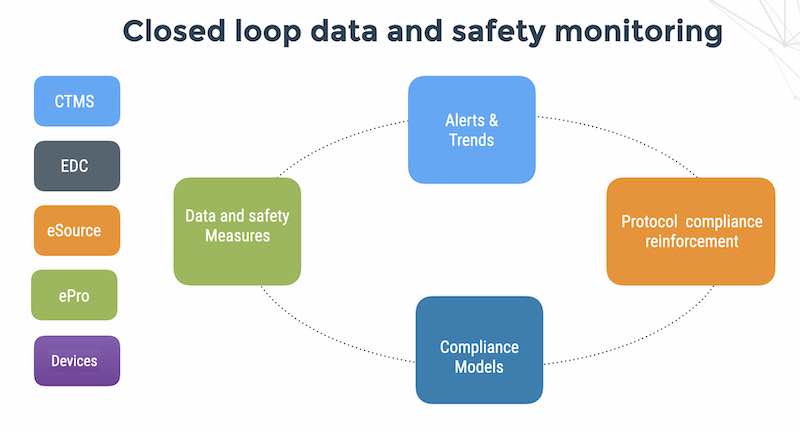

I challenge the assumption that it makes sense to allocate capital against the likelihood of extreme data loss events using EVT or any other kind of risk modeling. Instead, you need to make the company more robust to a major data loss event by implementing prioritized, cost-effective security countermeasures. Since a data loss event cannot be predicted, you need to mitigate the damage it causes when people exploit vulnerabilities in the company’s employees, systems and processes. To decide which security countermeasures are best, you need to measure the movement and value of data, and weigh that measurement in terms of a quantitative, practical threat model.

How do you build robustness?

First of all, turn off your phones, don’t talk to your security product vendors, and put on your thinking cap. Then follow this three-step process.

Step 1 – Test two fundamental hypotheses:

Hypothesis #1: Data loss is currently happening.

- What are the data types and volumes of data leaving the network?

- Who is sending sensitive information out of the company?

- Where is the data going?
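A minimal Python sketch of how the three Hypothesis #1 questions could be answered from outbound traffic records. The `EgressRecord` schema, the `SENSITIVE_TYPES` labels and the sample records are hypothetical; in practice the records would come from whatever network monitoring or proxy logs the company already collects, classified by content.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class EgressRecord:
    """One outbound transfer seen at the network perimeter (hypothetical schema)."""
    sender: str        # internal user or host that sent the data
    destination: str   # external domain or address receiving it
    data_type: str     # content classification label
    size_bytes: int


# Classifications treated as sensitive (an assumption for this sketch).
SENSITIVE_TYPES = {"credit_card", "customer_pii", "source_code"}


def summarize_egress(records):
    """Return volume per data type, plus who sends sensitive data and where it goes."""
    volume_by_type = defaultdict(int)
    sensitive_senders = Counter()
    sensitive_destinations = Counter()
    for r in records:
        volume_by_type[r.data_type] += r.size_bytes          # what and how much
        if r.data_type in SENSITIVE_TYPES:
            sensitive_senders[r.sender] += 1                 # who
            sensitive_destinations[r.destination] += 1       # where
    return dict(volume_by_type), sensitive_senders, sensitive_destinations


records = [
    EgressRecord("alice", "partner.example", "invoice", 20_000),
    EgressRecord("bob", "webmail.example", "credit_card", 5_000),
    EgressRecord("bob", "webmail.example", "customer_pii", 12_000),
    EgressRecord("carol", "upload.example", "source_code", 300_000),
]
volumes, senders, destinations = summarize_egress(records)
print(volumes)
print(senders.most_common(3))
print(destinations.most_common(3))
```

If the sensitive-sender and destination counts are non-empty, the hypothesis that data loss is already happening is confirmed, and the counts show where to look first.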

Hypothesis #2: A cost-effective set of robust security countermeasures exists.

- What is the Euro value of information assets on PCs, servers and mobile devices?

- What are you currently getting for your security dollar?

- What are your top 5 threats, valued in Euros?
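One way to put the Hypothesis #2 questions into Euros is the conventional annualized loss expectancy (ALE) calculation: asset value × exposure factor × estimated annual rate of occurrence. This is a standard risk-assessment technique swapped in for illustration, not necessarily the threat model the author has in mind, and the threats and figures below are hypothetical. The rate is an estimate used for ranking, not a forecast of when a black swan will strike.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    asset_value_eur: float   # Euro value of the information assets the threat can reach
    exposure_factor: float   # fraction of that value lost if the threat materializes (0..1)
    annual_rate: float       # estimated occurrences per year (an assumption, not a forecast)

    def annual_loss_eur(self) -> float:
        # Annualized loss expectancy = single loss expectancy x annual rate of occurrence.
        single_loss = self.asset_value_eur * self.exposure_factor
        return single_loss * self.annual_rate


def top_threats(threats, n=5):
    """Rank threats by annualized loss in euros, largest first, and keep the top n."""
    return sorted(threats, key=lambda t: t.annual_loss_eur(), reverse=True)[:n]


threats = [
    Threat("Laptop theft", 2_000_000, 0.05, 4.0),
    Threat("Insider e-mailing customer data", 10_000_000, 0.30, 0.5),
    Threat("Unpatched web server", 10_000_000, 0.60, 0.2),
    Threat("USB copy by contractor", 1_500_000, 0.50, 1.0),
    Threat("Misconfigured cloud share", 5_000_000, 0.20, 0.5),
    Threat("Phishing of finance staff", 800_000, 0.25, 1.5),
]
for t in top_threats(threats):
    print(f"{t.name:35s} {t.annual_loss_eur():>12,.0f} EUR/year")
```

Summing `asset_value_eur` across distinct assets answers the first question; the ranked list answers the third.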

Step 2 – Determine prioritized, cost-effective security countermeasures with the threat model
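Step 2 can be sketched as a greedy selection that ranks candidate countermeasures by estimated risk reduction per Euro spent and picks them until a budget runs out. The countermeasure names, costs and risk-reduction estimates are hypothetical, and greedy ranking by ratio is a simple heuristic rather than an optimal budget allocation.

```python
from dataclasses import dataclass


@dataclass
class Countermeasure:
    name: str
    annual_cost_eur: float
    risk_reduction_eur: float  # estimated drop in annualized loss, taken from the threat model

    @property
    def return_per_euro(self) -> float:
        # Euros of risk removed per Euro spent ("what you get for your security dollar").
        return self.risk_reduction_eur / self.annual_cost_eur


def prioritize(countermeasures, budget_eur):
    """Greedy pick by risk reduction per euro within a budget (heuristic, not optimal)."""
    chosen, spent = [], 0.0
    for m in sorted(countermeasures, key=lambda m: m.return_per_euro, reverse=True):
        if m.risk_reduction_eur <= m.annual_cost_eur:
            continue  # removes no more risk than it costs: not cost-effective
        if spent + m.annual_cost_eur <= budget_eur:
            chosen.append(m)
            spent += m.annual_cost_eur
    return chosen, spent


candidates = [
    Countermeasure("Full-disk encryption on laptops", 60_000, 350_000),
    Countermeasure("Outbound content monitoring", 150_000, 900_000),
    Countermeasure("Web application firewall", 80_000, 600_000),
    Countermeasure("USB port lockdown", 20_000, 500_000),
    Countermeasure("Premium threat-intel feed", 120_000, 50_000),
]
chosen, spent = prioritize(candidates, budget_eur=200_000)
for m in chosen:
    print(f"{m.name:35s} {m.return_per_euro:5.1f} EUR saved per EUR spent")
print(f"Total spend: {spent:,.0f} EUR")
```

With a 200,000 Euro budget, the sketch picks USB port lockdown, the web application firewall and laptop encryption (160,000 Euros in total). It skips outbound content monitoring because it no longer fits the remaining budget, and rejects the threat-intel feed because it costs more than the risk it removes.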

Step 3 – Implement and support security countermeasures. Then go back to Step 1.